Climate change, a pressing global challenge, has become a focus of scientific inquiry and public discourse. When did humanity first recognize the profound implications of this phenomenon? Tracing the lineage of climate science reveals a fascinating narrative woven through time, showcasing the gradual evolution of understanding that eventually led to today’s awareness of climate change.

As early as the Enlightenment in the 18th century, natural philosophers began pondering the intricate relationship between humans and their environments. However, formal acknowledgment of climate change as we understand it today emerged much later. The initial spark came in the early 19th century, when scientists began to ask how the atmosphere influences the Earth’s temperature.

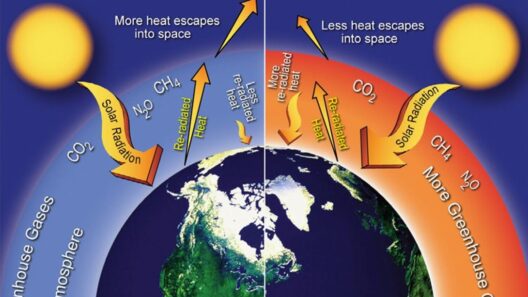

In 1824, Joseph Fourier, a French mathematician, described what is now called the greenhouse effect. He suggested that the Earth’s atmosphere traps heat, keeping the planet warm enough to remain habitable. This insight set the stage for a paradigm shift in scientific thought. But consider what might have happened had Fourier lacked the means to communicate his findings. Would climate change have been recognized far later, if at all? Herein lies a perplexing challenge: the intersection of scientific discovery and societal comprehension.

Fourier’s ideas were extended experimentally by John Tyndall, an Irish scientist, in 1859. Tyndall’s laboratory experiments demonstrated that certain gases, notably carbon dioxide (CO₂) and water vapor, absorb infrared radiation. His work provided empirical evidence for Fourier’s theoretical notions. However, it was not until the early 20th century that the implications of these findings began to reach beyond scientific circles.

Meanwhile, meteorology was maturing as a discipline. Expanding networks of weather stations and improved instruments allowed scientists to collect atmospheric data systematically. By the 1930s, a few researchers were examining whether growing carbon emissions from industry could alter the climate. In 1938, Guy Stewart Callendar, a British engineer, argued that CO₂ released by burning fossil fuels was already raising global temperatures, drawing an explicit link between fossil fuel consumption and warming.

Yet mainstream acceptance of climate change as a crucial issue remained elusive. Through the mid-20th century, research continued, but climate remained a niche concern while scientific attention was often directed elsewhere. The post-World War II economic boom, with its expanding industries and rapid urbanization, drove atmospheric carbon levels still higher, yet few heeded the warning signs.

The 1970s marked a pivotal shift in climate science awareness. A growing cadre of scientists began to articulate the perils of anthropogenic climate change with greater urgency. In 1972, the United Nations Conference on the Human Environment in Stockholm underscored the importance of environmental protection and helped place global environmental threats on the international agenda. Scientists like Dr. J. Murray Mitchell Jr. published studies examining how human activities might influence long-term climate, laying groundwork for future research. This raises a question worth pondering: did society have enough foresight to take concrete steps against the mounting climate crisis?

The establishment of the Intergovernmental Panel on Climate Change (IPCC) in 1988 marked a significant milestone in the global acknowledgment of climate change. This intergovernmental body was created to assess scientific information relevant to climate change, producing comprehensive reports that consolidate research from across the globe. Its findings were both alarming and urgent, providing a clarion call for immediate action.

Public discourse around climate change gained momentum as high-profile events, notably the 1992 Earth Summit in Rio de Janeiro and the adoption of the Kyoto Protocol in 1997, solidified climate change within international policy frameworks. Yet obstacles persisted: even as scientific knowledge advanced, denial and skepticism emerged as formidable barriers, often fueled by political agendas and economic interests.

In the early 21st century, the convergence of scientific data, environmental advocacy, and media coverage deepened public awareness of climate change. Al Gore’s documentary “An Inconvenient Truth,” released in 2006, provoked strong emotional responses and sparked conversations across socio-political boundaries. The overwhelming scientific consensus affirmed that recent climate change is driven by human activity and underscored the urgent need for sustainable practices. Yet, amid this heightened consciousness, one must ask: are we prepared to challenge the systemic inequities that perpetuate climate injustice?

Today, the urgency of climate change is indisputable, with climate scientists and activists converging to demand transformative action. The trajectory of climate science illustrates how our understanding evolved from mere curiosity to an unequivocal acknowledgment of a crisis that affects us all. Awareness of climate change now extends beyond environmental concerns to questions of justice and to economic and social well-being, prompting calls for societies to adopt holistic strategies for mitigation.

In conclusion, the recognition of climate change has undergone a profound transformation, shaped by scientific inquiry, societal values, and global governance. From the musings of early natural philosophers to modern-day calls for climate accountability, our understanding has come a long way. As we stand at this crossroads, the challenge remains: how will we navigate the shifting tides of climate action while fostering an inclusive approach that prioritizes the planet and its people?